
The Ethics of Artificial Intelligence: Navigating the Moral Dilemmas

Artificial intelligence (AI) is rapidly transforming our world, impacting everything from healthcare and transportation to entertainment and education. While the potential benefits are vast, including increased efficiency, groundbreaking discoveries, and solutions to complex global challenges, this technological shift also raises serious ethical questions. As AI systems become more sophisticated and integrated into our daily lives, it’s crucial that we grapple with the moral dilemmas they present. This post explores some of the key ethical issues surrounding AI, examining potential risks and suggesting avenues for responsible development and deployment.

Bias and Discrimination in AI

One of the most pressing concerns is algorithmic bias. AI systems learn from data; if that data reflects existing societal biases – whether related to race, gender, socioeconomic status, or other factors – the AI will likely perpetuate and even amplify those biases. This can lead to discriminatory outcomes in areas like loan applications, hiring processes, criminal justice, and even medical diagnosis.

How does bias creep into AI? Several factors contribute:

* **Historical Data Bias:** Training datasets often contain historical data that reflects past inequalities. For example, a hiring model trained on a company’s past hiring decisions can learn to reproduce whatever preferences shaped those decisions.

* **Sampling Bias:** The way data is collected can also introduce bias. If a dataset doesn’t accurately represent the population it’s intended to serve, the resulting AI model may be skewed. For example, a facial recognition system trained primarily on images of white faces will likely be less accurate for people with darker skin tones.

* **Algorithmic Design Choices:** Even seemingly neutral design choices made by developers, such as which features a model uses or which outcome it is optimized for, can inadvertently embed biases into an algorithm.

Addressing this requires careful attention to data collection and curation – ensuring diversity in datasets and actively identifying and mitigating bias during development. Furthermore, ongoing monitoring and auditing of AI systems are essential to detect and correct for discriminatory outcomes.
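To make the idea of auditing a little more concrete, here is a minimal sketch of one common check: comparing the rate of positive outcomes across groups. The function names, the sample decisions, and the 0.8 review threshold (borrowed from the “four-fifths” rule of thumb used in US employment contexts) are assumptions chosen for illustration. A real audit would look at far more than a single ratio.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True or False.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome
    return min(rates.values()) / highest


# Hypothetical loan decisions, invented for this example.
sample = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
# The 0.8 threshold is an assumed rule of thumb, not a legal test.
print(rates)
print(f"ratio = {ratio:.2f}", "-> review" if ratio < 0.8 else "-> ok")
```

A low ratio does not prove discrimination on its own, and a ratio near 1.0 does not rule it out. It is a signal that tells auditors where to look more closely.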

Accountability and Responsibility

As AI systems take on increasingly complex tasks, determining accountability when things go wrong becomes a significant challenge. If a self-driving car causes an accident, who is responsible? The programmer? The manufacturer? The owner? Or the AI itself?

The “Black Box” Problem: Many advanced AI systems, particularly those based on deep learning, are often described as “black boxes.” Their decision-making processes are opaque and difficult to understand, making it challenging to pinpoint the cause of an error or assign responsibility.

Establishing clear lines of accountability is crucial. This may involve developing new legal frameworks that address AI liability, promoting transparency in AI design, and implementing mechanisms for auditing and explaining AI decisions. The concept of “explainable AI” (XAI) – which aims to make AI decision-making more understandable – is gaining traction as a potential solution.
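One simple, model-agnostic technique from the XAI toolbox is permutation importance: shuffle the values of a single input and measure how much the model’s accuracy drops. The sketch below assumes a toy stand-in for a black box; `opaque_model`, the feature names, and the generated data are all hypothetical. It illustrates the idea only and is not a production explainability tool.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(model(row) == label for row, label in zip(rows, labels))
    return correct / len(rows)


def permutation_importance(model, rows, labels, feature, rng):
    """Accuracy drop after shuffling one feature's values across rows."""
    baseline = accuracy(model, rows, labels)
    shuffled_values = [row[feature] for row in rows]
    rng.shuffle(shuffled_values)
    shuffled_rows = [
        {**row, feature: value} for row, value in zip(rows, shuffled_values)
    ]
    return baseline - accuracy(model, shuffled_rows, labels)


def opaque_model(row):
    """Stand-in for a black box: approves when income is at least 50."""
    return row["income"] >= 50


rng = random.Random(0)
# Hypothetical applicants with one relevant and one irrelevant feature.
rows = [
    {"income": rng.randint(0, 100), "shoe_size": rng.randint(36, 46)}
    for _ in range(200)
]
labels = [opaque_model(row) for row in rows]

for feature in ("income", "shoe_size"):
    drop = permutation_importance(opaque_model, rows, labels, feature, rng)
    print(f"{feature}: accuracy drop {drop:.2f}")
```

Shuffling a feature the model ignores leaves accuracy unchanged, while shuffling one it depends on makes accuracy fall. That gives a rough picture of what drives a decision without opening the box.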

Job Displacement and Economic Impact

The automation capabilities of AI raise concerns about widespread job displacement. While AI may create new jobs in areas like AI development and maintenance, it’s likely to automate many existing roles, potentially leading to unemployment and economic inequality.

Addressing the Challenge: Limiting this disruption requires proactive measures such as:

* **Reskilling and Upskilling Initiatives:** Providing workers with training in new skills that are complementary to AI.

* **Social Safety Nets:** Strengthening existing social safety nets, such as unemployment benefits, and evaluating proposals like universal basic income to support those displaced by automation.

* **Focus on Human-AI Collaboration:** Exploring ways for humans and AI to work together, leveraging the strengths of both.

Privacy Concerns

AI systems often rely on vast amounts of data – much of it personal information – to function effectively. This raises significant privacy concerns. Facial recognition technology, predictive policing algorithms, and personalized advertising all depend on collecting and analyzing sensitive data.

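Data Security & Anonymization: Robust data security measures are essential to prevent misuse of, or unauthorized access to, this data. Techniques like anonymization and differential privacy can help protect individual privacy while still allowing AI systems to learn from data, but neither is foolproof: anonymized records can sometimes be re-identified by linking them with other datasets, and differential privacy trades some accuracy for its guarantees.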
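For readers curious how differential privacy works in practice, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names and the sample records are assumptions made for illustration. Python’s `random` module is also not suitable for real privacy guarantees, so treat this as a teaching aid only.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential samples with the same
    mean `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Count matching records, then add noise calibrated to epsilon.

    A counting query changes by at most 1 when one person's record is
    added or removed (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(42)
ages = [23, 35, 41, 52, 67, 29, 48, 73, 31, 60]  # hypothetical records

for epsilon in (0.1, 1.0, 10.0):
    noisy = private_count(ages, lambda age: age >= 50, epsilon, rng)
    print(f"epsilon={epsilon}: noisy count = {noisy:.1f} (true count is 4)")
```

A smaller `epsilon` means more noise and stronger privacy, and a larger one means less noise and more accurate answers. Picking that balance is a policy decision as much as a technical one.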

Regulations such as the European Union’s General Data Protection Regulation (GDPR) aim to give individuals more control over their personal data and hold organizations accountable for how they use it. However, regulators will need to keep revisiting these rules to keep pace with rapidly evolving AI technologies.

Autonomous Weapons Systems (AWS)

One of the most profound ethical challenges posed by AI is the development of autonomous weapons systems, sometimes referred to as “killer robots.” These are weapon systems that, once activated, can select and engage targets without further human intervention. The prospect of machines making life-or-death decisions raises serious moral, legal, and strategic concerns.

Arguments Against AWS:

* **Lack of Human Judgment:** Critics argue that AWS lack the capacity for nuanced judgment and empathy necessary to distinguish between combatants and non-combatants.

* **Accountability Vacuum:** Determining responsibility in cases where an AWS causes unintended harm is extremely difficult.

* **Escalation Risk:** The deployment of AWS could lower the threshold for armed conflict and increase the risk of accidental escalation.

There’s a growing international movement calling for a ban on the development and deployment of fully autonomous weapons systems, emphasizing the need to retain human control over lethal force decisions. The debate is complex and ongoing.

Conclusion: Towards Responsible AI

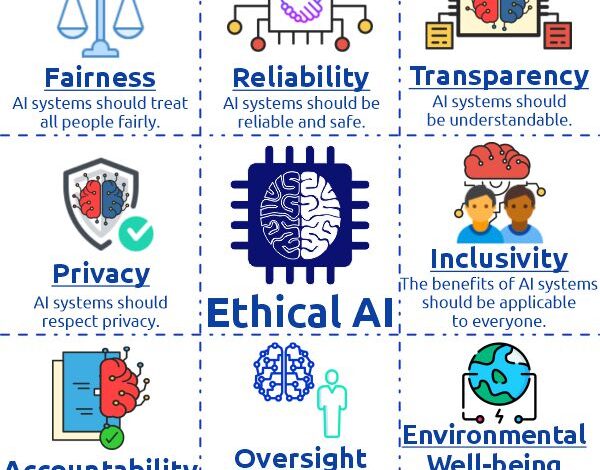

The ethical challenges posed by AI are multifaceted and demand careful consideration. Addressing these issues requires a collaborative effort involving researchers, policymakers, industry leaders, and the public. We need:

- Ethical Guidelines & Frameworks: Clear guidelines for responsible AI development and deployment.

- Robust Regulations: Legal frameworks that address accountability, privacy, and bias.

- Transparency & Explainability: AI systems that are understandable and auditable.

- Public Dialogue: Open discussion about the ethical implications of AI.

AI has the potential to be a force for good in the world, but only if we prioritize ethical considerations alongside technological advancement. By proactively addressing these moral dilemmas, we can harness the power of AI while mitigating its risks and ensuring that it benefits all of humanity.